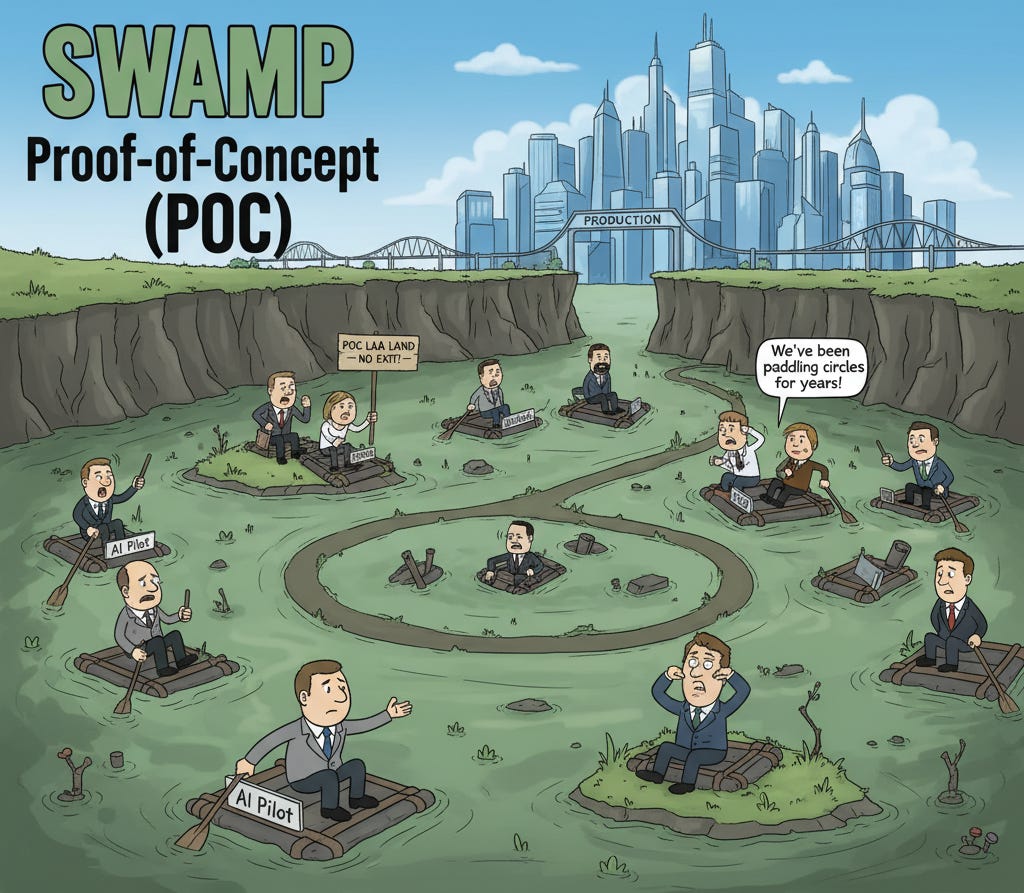

Escaping the AI “POC Swamp”

Why So Many Projects Stall and How to Ship What Matters

Organizations everywhere are experimenting with AI. Proof-of-concepts (POCs) spring up in labs, hackathons, and innovation teams. Yet for most companies, those prototypes never turn into production systems. They linger in “POC-land” or get quietly abandoned. This blog explains why that happens, what “production-ready” really means, how to build a playbook for moving from POC to production, and the anti-patterns to avoid.

Why POCs Stall – The Root Causes

Most AI prototypes are built under idealized conditions: a small, motivated team, clean samples of data, no long-term cost constraints. The moment you try to deploy them into a messy enterprise environment, problems appear. Surveys repeatedly show fewer than half of AI projects make it to production, and large portions of GenAI initiatives are paused or scrapped within a year. Here are the most common reasons:

Misalignment with business value.

Many teams build POCs because “we should do AI,” not because there is a clear, measurable business problem. Without a specific owner, baseline, and target metric tied to a budget, there is no way to prove value. When steering committees review funding, the project dies.Poor data quality and integration.

Data is often messy, incomplete, siloed or stale. A model trained on clean test data can break when it sees real-world distributions. Integrating multiple systems, dealing with latency, and keeping features up-to-date are major undertakings that get ignored during the POC stage.Technical and infrastructure gaps.

Enterprises frequently lack production-grade pipelines for training, deployment, monitoring and rollback. MLOps practices model registries, CI/CD for models, automated testing are missing. Without those, moving a model out of a notebook and into a live environment is slow and risky.Governance and compliance blockers.

POCs often bypass privacy, security, fairness, and audit requirements. Once legal or risk teams review the system, they impose new obligations that can take months to implement. Documentation, model cards, lineage, and red-teaming are rarely prepared up front.Overambitious or vague problem selection.

Some projects aim for technically hard problems that current methods can’t solve reliably. Others are so vaguely defined that scope creeps without bound. In both cases, the POC never meets expectations.Organizational and cultural factors.

AI systems rarely succeed without cross-functional collaboration. If business units, engineers, data scientists, and compliance officers aren’t aligned from the start, miscommunication slows everything. Change management, the training and process redesign needed to adopt the new system is often left for later and becomes a barrier.Hidden total cost of ownership.

Cloud inference, storage, retraining, human oversight, and monitoring costs can skyrocket compared to a sandbox environment. When decision-makers see the real bill for scaling, they cancel or postpone deployment.

What “Production-Ready” Really Looks Like

Before you commit to scale, you need to be honest: is the system production-ready? A truly production-ready AI project hits a series of minimum bars across several dimensions.

First, it is tied to a real business outcome. There is a clearly defined problem statement, a named business owner, a baseline, and a target metric for success. The project is not “AI for AI’s sake” but a tool to move a specific needle such as reducing churn, cutting costs, or improving risk detection.

Second, data readiness is in place. Clean, representative, labeled data flows from production systems into training and inference pipelines. Data quality service-level agreements, ownership, and lineage are documented. The team knows how to handle drift, schema changes, and data latency.

Third, there is an operational pipeline. Code, models, and prompts are version-controlled. Continuous integration and deployment processes are set up for models. Scalable training and inference infrastructure exists. Rollback mechanisms are tested. Monitoring and alerting are configured for latency, cost, and accuracy.

Fourth, governance and risk controls are embedded. Privacy impact assessments are complete. Bias, fairness, and safety have been evaluated. Model documentation limitations, data sources, evaluation metrics - is ready for audit. Security considerations such as adversarial input handling or prompt injection mitigation are addressed.

Fifth, the user and workflow integration has been tested. The system plugs into real workflows, handles exceptions gracefully, and has UI/UX or API stability. Human-in-the-loop processes are defined where needed.

Finally, cost and resource management are explicit. Compute, storage, and latency costs are estimated and budgeted. A cost cap or threshold is agreed. There is a plan to optimize usage as scale increases.

Without these elements, your “POC” is still a science project, not a product.

A Practical “POC-to-Production” Playbook

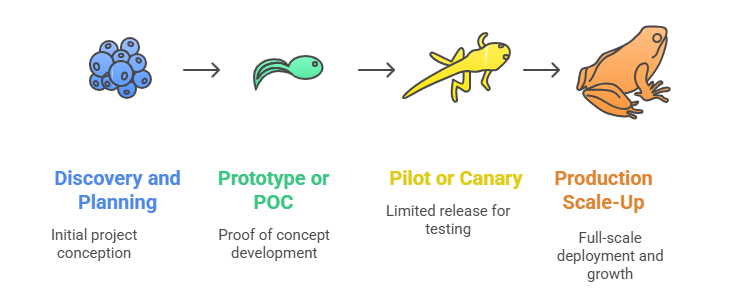

How do you actually get from an experiment to a production system? Below is a staged approach that has worked for many teams.

Stage 0 – Discovery and Planning

Spend two to four weeks upfront identifying and scoring potential use cases. Evaluate value versus feasibility: data readiness, technical risk, regulatory exposure. Assemble a cross-functional team including business, data science, engineering, and compliance. Define clear KPIs, baselines, and success criteria. Map data sources and compute resources. Draft a preliminary governance plan. Only proceed if the use case scores high on value and feasibility and has stakeholder buy-in.

Stage 1 – Prototype or POC

In four to six weeks, build a prototype on a realistic sample of data. Validate basic functionality and feature performance. Test for data issues such as missing values or distribution mismatch. Run initial evaluations of accuracy, latency, and bias. Start instrumenting monitoring and logging. Estimate costs of training and inference at scale. This phase should already mimic production conditions where possible, rather than relying on idealized test sets.

Stage 2 – Pilot or Canary

Next, deploy to a limited production audience or workflow. Observe behavior in the “wild” with real data and users. Monitor latency, throughput, reliability, and error handling. Run drift and distribution-shift detection. Collect user feedback and exception cases. Ensure compliance obligations are met and documentation is complete. Prepare rollback or fail-safe plans. This stage gives you real evidence of business value and operational viability.

Stage 3 – Production Scale-Up

Once the pilot meets its gates, roll out across the broader use case. Automate pipelines for retraining and feature updates. Build observability dashboards for performance, cost, and drift. Define and monitor service-level objectives for latency, quality, and cost. Train staff, update processes, and integrate the system fully into workflows. Maintain a plan for monitoring and incident response. Keep evaluating ROI and iterating on the model and process.

At every stage, have explicit “go / no-go” gates. If the system fails to hit metrics or exceeds cost thresholds, pause or pivot instead of pushing blindly forward.

Anti-Patterns to Avoid

As important as a playbook is knowing what not to do. Here are the common anti-patterns that doom AI projects:

“Sandbox heroics” with no path to production.

Building a beautiful model in a notebook that no one can deploy or maintain is a dead end. If you can’t describe how the model will run, be monitored, and be retrained in production, you’re still in science-project mode.

Chasing hype instead of solving a problem.

Jumping on the latest large language model, agentic AI, or generative tool without linking it to a specific business outcome is a recipe for disappointment. Technology should be a means, not an end.

Skipping governance until later.

Privacy, fairness, and audit controls need to be designed in from day one. Retrofitting them after a prototype is built often requires massive rework or kills the project.

Underestimating cost and operational complexity.

POCs often ignore the real-world cost of inference, storage, and human oversight. When costs are revealed at scale, budgets evaporate. Plan cost envelopes early and make them part of your go/no-go criteria.

Neglecting change management.

If users, operations, and business owners aren’t engaged early, adoption will fail. Training, process changes, and feedback loops are harder than building the model but essential for success.

Having weak metrics or no success gates.

Without clear baselines and targets, you can’t prove your POC adds value or decide whether to move forward. Set explicit acceptance thresholds before you write code.

Gold-plating the prototype.

Trying to handle every edge case or tune for 99.9% accuracy before you’ve proven basic effectiveness wastes time and resources. Build a thin slice that solves a core use case well, prove value, then iterate.

Conclusion

Escaping the “POC swamp” isn’t just about better algorithms. It’s about discipline: picking the right problems, designing for production from the start, embedding governance and cost control early, and moving through clearly defined phases with clear gates.

If you start with business outcomes, ensure data and infrastructure readiness, integrate governance and monitoring, and adopt a phased playbook, your AI initiatives will have a far higher chance of moving from flashy prototypes to reliable systems delivering real impact.

And perhaps most importantly, don’t fall in love with the prototype. Fall in love with shipping value.

About the Author

I’m Sagar Nikam, an AI Product Leader who writes about AI and product strategy, real case studies, and practical tips. If you enjoyed this piece and want more hands-on insights into making AI work in the real world, subscribe to my weekly newsletter for curated news, frameworks, and lessons from the field.